SIGGRAPH 2024 in Denver, Colorado showcased a range of groundbreaking tech in the Emerging Technologies hall, highlighting the future of immersive and interactive experiences. Here’s a look at some of the standout demonstrations Instinctively Real Media experienced at this year’s conference.

The Malleable-Self Experience

A highlight of SIGGRAPH Denver was The Malleable-Self Experience, in which your body itself becomes the medium. It is a collaborative research project between partners Enhance Experience Inc. and Keio Media Design.

The experience combines VR visuals with the full-body sensations of the Synesthesia X1 haptic chair to challenge and expand perceptions of body image. It aims to alter one’s self-perception through dynamic VR body representations and multi-sensory events:

- Establishing virtual body ownership in the participant’s space.

- Separating the virtual body to hover above the physical body with added haptic feedback.

- Transforming the virtual body with synchronized visual and haptic stimuli to maintain perceptual coherence.

The chair’s system of 44 vibrating actuators and two speakers was developed by Enhance Experience Inc., and two previous works were showcased in Japan.

The exhibition was made possible through joint research with Dr. Minamizawa’s lab at Keio University and with Embodied Media, led by Project Researcher Tanner Person, in Japan.

Presented by Tanner Person, Nobuhisa Hanamitsu, Danny Hynds, Sohei Wakisaka, Kota Isobe, Leonard Mochizuki, Tetsuya Mizuguchi, and Kouta Minamizawa, the project won the Audience Award at this year’s Emerging Technologies showcase. It blends sensory inputs to create a deeply immersive experience, illustrating the potential of multi-sensory integration in digital media.

Ultraleap’s Latest Hand-Tracking Advances Spatial Interactions

Renowned for its hand-tracking technology, Ultraleap impressed attendees with their latest developments in event-sensor-based hand tracking.

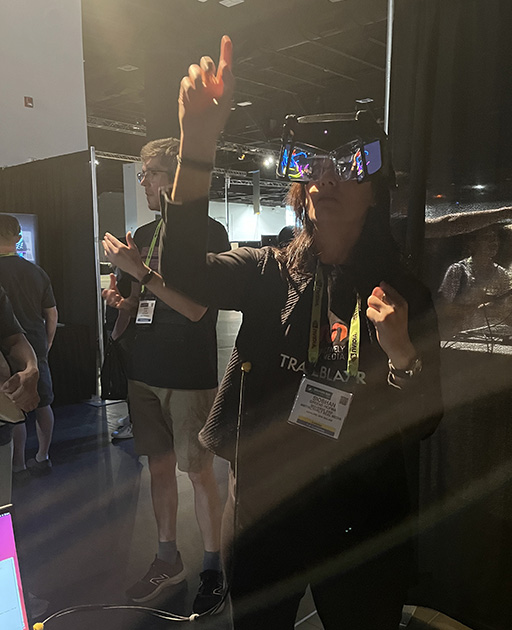

Instinctively Real Media’s Siobhán Greene Hofma gets hands-on in XR with Ultraleap

Their advanced systems offer enhanced precision and responsiveness, significantly improving user interactions in both virtual and augmented reality environments.

Ultraleap is introducing a new way to track hand movements using event cameras mounted on AR glasses. Conventional image sensors capture full frames at a fixed rate, which consumes a lot of power. Event cameras instead report only per-pixel changes in brightness as they occur. This efficiency can help make AR glasses more practical and powerful, and the technology promises to make digital experiences more intuitive and engaging.

KineSway: Kajimoto Lab’s Haptic Innovations

With KineSway, Kajimoto Laboratory presented its latest research in haptic feedback technology. The lab’s work focuses on making touch sensations in digital environments more realistic and responsive, which in turn makes virtual experiences more immersive. Its ankle-strap prototypes demonstrate significant advances in recreating lifelike ground movement.

In video games, virtual reality, and interactive experiences, motion platforms are used to simulate the feeling of the ground moving or tilting. However, these systems are often expensive and take up a lot of space. To address this, the researchers propose an alternative approach that creates the sensation of ground movement through vibration. By applying vibrations to specific tendons in the ankle and combining this with visual cues, the system mimics the feeling of the ground swaying or tilting without actually moving it. This method is safer, easier to install, and more affordable than traditional motion platforms.

Google’s Project Starline: The Magic Window to Another’s Reality

Starline received the SIGGRAPH 2024 DCAJ Award. It is a groundbreaking telepresence system that sets a new standard for virtual meetings, making video calls look and feel far more realistic than regular ones. Unlike traditional 2D video conferencing, Starline delivers a photorealistic experience that has been shown to outperform 2D video in participant ratings, meeting recall, and non-verbal communication. The system integrates cutting-edge technologies, including advanced face tracking, real-time 3D image generation, spatial audio, and high-resolution displays. Together, these create a remarkably lifelike sense of being in the same room as the other person, a level of realism that conventional video calls simply can’t match. The project showcases Google’s commitment to bridging the gap between virtual and physical presence.

WaterForm Multi-Sensory Feedback

One captivating innovation was WaterForm, a multi-sensory feedback technology and VR experience by researchers at National Taipei University of Technology, Taiwan. The system uses water to deliver real-time, multi-sensory interactions. Delivering multiple sensory inputs simultaneously in virtual environments remains a significant challenge, particularly when trying to match the immersive quality of the real world.

To address this challenge, the team designed WaterForm as a liquid-transformation system that enhances virtual experiences through multi-sensory feedback. WaterForm uses liquid to simulate a range of sensations, including splashes, flowing water, gravity, wind, resistance, buoyant forces, mechanical energy, and mist, with the aim of deepening immersion in virtual environments. The demonstration featured a VR journey through a virtual East landscape, designed to explore self-awareness through engaging storytelling. By combining touch, sound, and visuals, the technology provides a unique, immersive experience of digital interaction and sensory engagement.
