Emil Nidal | Technical Animator, Motion Capture, Real-Time Workflows

SØRENGE

With multiple blockbuster movie credits to his name, including Wonka, Doctor Strange and Venom, as well as the Amazon Prime TV series The Lord of the Rings: The Rings of Power, Emil Nidal’s career in virtual production, motion capture and real-time workflows now finds him in the world of video games as a Technical Animator.

Great to have you join TrailblaXR. Please introduce yourself and tell us about your specialities.

Hello, I’m Emil Nidal and my speciality lies in virtual production, real-time workflows, and motion capture. Currently, I am working as a Technical Animator.

A Technical Animator knows how to create rigs, skeletons, and skinning for 3D models like characters, creatures, vehicles and props, as well as how to automate tasks with a scripting language or blueprints. The software could be any combination of a DCC (Digital Content Creation) tool like Autodesk Maya, MotionBuilder, Houdini or Blender and a game engine like Unreal Engine or Unity, and of course version control systems like Helix P4V.

Moreover, such technical roles, whether technical animator, technical artist or technical director in any discipline, are often needed for artist support and pipeline work. Virtual production and motion capture roles also involve specialist software for the specific capture system and some hands-on work with cameras, suits, or other physical equipment.

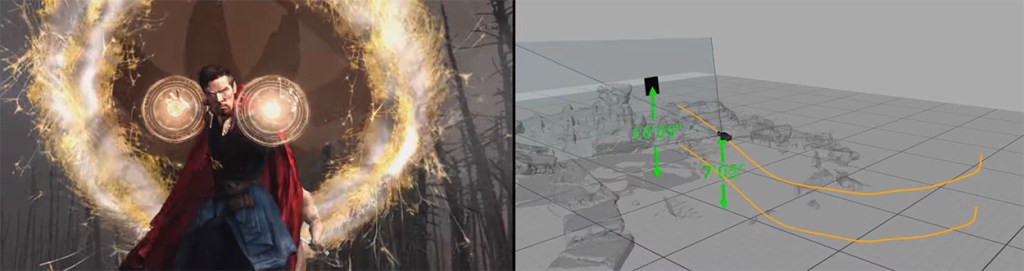

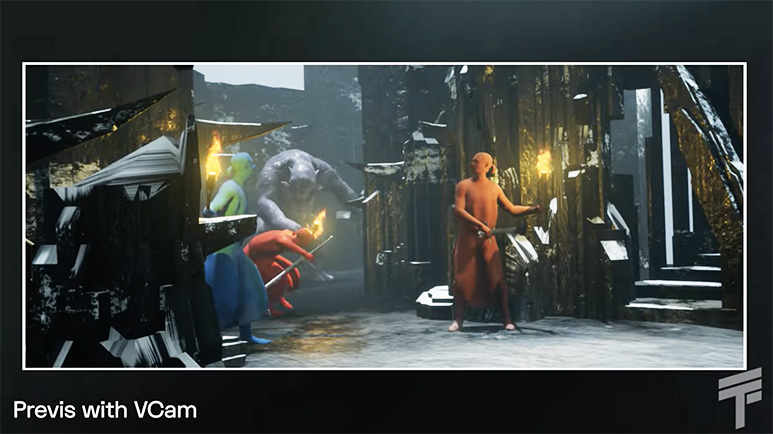

The Third Floor utilising motion capture for virtual production to capture realistic body performance of the actor and retarget movements to digital counterparts.

You’ve worked in animation, virtual production, VFX and now games. Tell us a little bit about your experiences and explain how cross-transferable your skills have been within these industries.

The common thread across the roles I have worked in is the DCC-to-engine pipeline.

The tools needed to transfer animation created in Maya for visualisation or for a video game are more or less the same. In all scenarios you have animation in a DCC tool like Maya or MotionBuilder and you need it to work in a game engine like Unreal Engine or Unity. Whether you make it work live for virtual production or in post-production, as in traditional animation or games, it still uses the same methods and principles.


Some cross-transferable skills are adaptability, prototyping and troubleshooting. In pretty much all my roles I had to do some type of R&D: propose a prototype, work with developers to make a tool, or propose a workflow for artists. Another example would be identifying issues and providing solutions to prevent bottlenecks.

The Third Floor Virtual Visualization Series

What successes have you had professionally and what’s next for you?

My role as Techvis Lead on Doctor Strange in the Multiverse of Madness showed that I can be relied on for such work, as did other shows where I participated as a lead and delivered the required work within deadlines. Running things live, such as virtual camera sessions or location scouting with VR headsets or iPads for filmmakers on productions like Venom: Let There Be Carnage or The Lord of the Rings: The Rings of Power, is another example of successful work.

Part of my toolkit has been The Third Floor’s Pathfinder VR app, as well as Framestore’s fARsightGo AR app. Both are proprietary virtual scouting platforms, which I used during the production of Wonka, Venom 2, and The Midnight Sky.

What I am looking forward to is expanding my skill set. I want to write more tools, pipelines and experiment with new emerging techniques. I also enjoy mentoring junior artists and returning the favour I received when I was at the beginning of my career.

How critical is realistic 3D character performance for great player experiences during gameplay and how is this achieved for game characters?

Depending on the specific game style, realistic performances could be required for an AAA game. Such productions would utilize actor scanning, motion capture and complex deformation systems.

Can you talk about some of the most exciting projects you’ve worked on within your career?

It was quite exciting to run a motion capture of an actor on the film set of Jingle Jangle with live compositing by Ncam. The performer was acting as a 12-inch puppet using an Xsens suit, with the retarget streamed live via MotionBuilder with Ncam to Unreal Engine 4. This was in 2019, on the first show where we used the Ncam plugin for UE4, and it was quite challenging. We had some scenes where the actor would need to perform on scaled-up tape markings on the floor while looking at a screen showing the scenes currently being filmed.

Briefly explain what attracted you to forge a path in the games industry and how you got there?

I was curious to learn about the challenge of making a technical animation pipeline for a game, as the projects are quite different. In Film/VFX a project would have a timeline of anywhere from a few months to a year and a half, while a video game would take anywhere from 3 to 5 years or even more depending on the case.

I guess I started preparing at university by training to be a rigger. Then my first job, working as a motion capture technician, taught me what animation games would need and all the live parts of technical animation too. After that I worked as a Technical Artist for an animation software company supporting game studios, which showed me some great animation solutions. Then I worked as a Virtual Production Technical Director for The Third Floor and Framestore, where I supported visualisation artists who would come up with and animate not only film scenes but individual character animations as well.

Which animation pipeline tools and technologies do you rely on day-to-day; what are the key qualities they deliver?

The base of an animation pipeline is being able to export animation from a DCC software like Maya to a game engine like Unreal. For this you would need an animation exporter, IK/FK (inverse kinematics and forward kinematics) transfers, space switching, and of course a reliable rigging pipeline with procedural rig building and… many other things depending on the project needs, in order to enable animators to animate efficiently. On top of that, I would suggest some sort of batch process for these operations, as it is always super handy. Such processes deliver key qualities like flexibility: you need to be able to iterate quickly on your rigs/skeletons.
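As an editorial illustration of the batch process idea, here is a minimal Python sketch, not Emil's actual tooling. The names `batch_export` and `export_clip` are hypothetical: `export_clip` stands in for whatever DCC-specific exporter a studio already has, and the `.fbx` suffix is only an assumed default.

```python
from pathlib import Path
from typing import Callable, Dict, Iterable


def batch_export(
    source_files: Iterable[Path],
    output_dir: Path,
    export_clip: Callable[[Path, Path], None],
    output_suffix: str = ".fbx",  # assumed default, change to suit the engine
) -> Dict[str, object]:
    """Run a (hypothetical) exporter on every source file and report failures."""
    output_dir.mkdir(parents=True, exist_ok=True)
    exported = []
    failed = {}
    for source in sorted(source_files):
        target = output_dir / (source.stem + output_suffix)
        try:
            export_clip(source, target)
            exported.append(target)
        except Exception as error:
            # Record the problem and carry on, so one bad scene
            # does not stop the rest of the batch.
            failed[source] = str(error)
    return {"exported": exported, "failed": failed}
```

Passing the exporter in as a function keeps the batch loop independent of any one DCC, and the returned report makes it easy to see which scenes need attention after a rig change.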

Have you tried out Apple Vision Pro and what are your thoughts on engagement value and adoption of XR or Spatial Computing across Media & Entertainment?

I have not tried the Apple Vision Pro yet, but I am curious to see how it will change people’s perception of XR and what is coming up next. I have mainly used HTC VIVE Pro and Oculus and, having worked with VR and AR in the past, I can only look forward to a future that adopts more XR tech. I have created packaged Unreal AR apps for iPads for things that could also be achieved with Apple Vision Pro, since it shares features with iOS.

In your opinion, has AI and Machine Learning dramatically improved animation processes?

Machine Learning and AI have improved animation processes by enabling Motion Matching techniques to help create high quality and complex character animation. At the current stage of AI results, I can see the animation process improving for indie and student projects. For example, there are now many “Mocap AI” solutions that only need normal cameras to work, with no need for sensors, specific cameras or suits. This enables non-mocap professionals to quickly test and try things! But it does come at a price, as the principles are the same as with traditional optical mocap using retroreflective markers: if you want quality and coverage, you have to increase your number of cameras, pay for specific licenses, etc.

What do you think are the most exciting trends across the industry now and in the future?

An exciting trend is using game engines to produce animations or other linear content. I would be happy to see artists animating in Unreal in the future.

What are your most memorable career moments?

I feel my most memorable career moments are related to going to a remote destination for a shoot. Lyme Regis’s harbour has stunning sunrises and makes you forget you are in the UK. During the filming of Wonka in St. Paul’s Church in London it was quite funny to see Rowan Atkinson being chased by a full-scale giraffe puppet.

But definitely my most notable moment would be flying out to New Zealand to work on The Lord of the Rings: The Rings of Power, Season 1. At the end of February 2020 I was sent on a dream project (since I am a big Tolkien nerd) and two weeks later the global Covid pandemic was announced. This happened around 4 years ago now and it was memorable for many reasons. It was a big virtual production show and, perhaps due to the downtime during Covid lockdowns, we had the time to visualise pretty much the whole length of the first two episodes. For some scenes I ended up working through three or four stages, such as previs, postvis, techvis, virtual camera or virtual location scouting. That was an experience unlike any other production. Virtual production is fully utilised when your team is integrated early in pre-production and works closely with the Art Department to visualise the Director’s and Cinematographer’s vision.

Is there anything else you’d like to share with your audience?

When building a new pipeline for any DCC tool, it is important to spend some time designing it to be reusable. Pipeline tools are usually written in Python, and this gives the opportunity to make them reusable to a certain extent. Imagine you have to create the same tool for two different DCC packages like Maya and Max: you could reuse part of the code, as the principles would be the same.
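One common way to get this kind of reuse, shown here purely as an editorial sketch rather than as Emil's own approach, is to keep the tool logic separate from a thin per-DCC adapter. All names below (`DccAdapter`, `add_prefix_to_selection`, `InMemoryAdapter`) are hypothetical, and the in-memory adapter only stands in for a real Maya or Max wrapper so the example runs anywhere.

```python
from abc import ABC, abstractmethod
from typing import List


class DccAdapter(ABC):
    """The small set of operations the tool needs from any DCC (hypothetical)."""

    @abstractmethod
    def selected_nodes(self) -> List[str]:
        """Return the names of the currently selected nodes."""

    @abstractmethod
    def rename(self, node: str, new_name: str) -> str:
        """Rename a node and return its new name."""


def add_prefix_to_selection(dcc: DccAdapter, prefix: str) -> List[str]:
    """DCC-agnostic tool logic: prefix every selected node that lacks it."""
    result = []
    for node in dcc.selected_nodes():
        if node.startswith(prefix):
            result.append(node)  # already prefixed, leave as-is
        else:
            result.append(dcc.rename(node, prefix + node))
    return result


class InMemoryAdapter(DccAdapter):
    """Stand-in adapter for demonstration; a real one would wrap the
    selection and rename commands of the specific DCC package."""

    def __init__(self, nodes: List[str]) -> None:
        self.nodes = list(nodes)

    def selected_nodes(self) -> List[str]:
        return list(self.nodes)

    def rename(self, node: str, new_name: str) -> str:
        self.nodes[self.nodes.index(node)] = new_name
        return new_name
```

With this split, supporting a second DCC would mean writing one more adapter class while `add_prefix_to_selection` stays untouched, and the in-memory adapter doubles as a way to test the tool outside any DCC.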

Thank you Emil for contributing to TrailblaXR, keep on inspiring and trailblazing the games and virtual production industry!

Image Credits: The Third Floor.


